Audio and Multimedia Researchers Participate in D-CASE Challenge

On October 22, Audio and Multimedia researchers presented a system for audio scene identification at the IEEE Workshop on Applications of Signal Processing to Audio and Acoustics in New Paltz, New York. The system analyzes audio recordings and identifies their “scenes” – descriptions of where or in what circumstances they were recorded, such as a supermarket or a restaurant. The research was carried out by Benjamin Elizalde, Howard Lei, and Gerald Friedland of Audio and Multimedia, along with Nils Peters, an alum of ICSI's visiting agreement with the DAAD of Germany, and was submitted as an entry for the IEEE-sponsored Detection and Classification of Acoustic Scenes and Events (D-CASE) challenge.

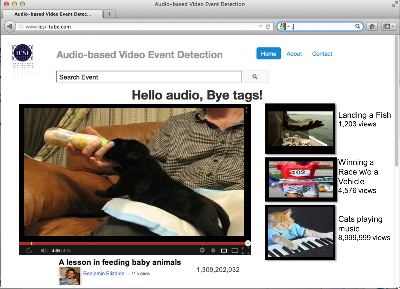

Audio and Multimedia researchers developed the system as part of ICSI's work under the ALADDIN Program, sponsored by IARPA. The program funds teams to build systems that use analysis of video, audio, and other modalities to detect “events,” also known as “scenes” – targets for the project include "making a sandwich" and "wedding proposal" – in user-generated videos, such as those uploaded to YouTube. These videos make up a corpus that grows by hundreds of hours per minute and has few controls on length, quality, overlap, or overall acoustics, and it therefore presents unique challenges for automatic analysis. ICSI, as a member of a multi-institutional ALADDIN team led by SRI Sarnoff, works on the analysis of non-speech audio – sounds such as dogs barking and doors slamming, as well as environmental noise such as traffic and running water.

"With multimedia video content analysis," Benjamin said, "we are able to open the black box of video." The ultimate goal of multimedia event detection is to automatically find videos that depict any event, but for now, evaluations like IEEE's D-CASE this October ask participants to find audio clips that match a defined list of characteristics. D-CASE had three components: Sound Detection using live recordings from an office; Sound Detection using synthetic audio from an office; and the Scene Classification task, in which our researchers participated and which required teams to classify audio as belonging to one of 10 scenes, such as a busy street or a restaurant. The organizers provided 100 30-second clips in total, recorded in the London area, of which participants used 90 as training data and 10 as test data – the audio to be classified. In subsequent tests, the test clips were swapped with others from the training pool, so that different clips served as test data each time.
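The 90/10 split with rotating test clips resembles what is commonly called 10-fold cross-validation. The following is a minimal sketch of that idea in Python, not the challenge's actual protocol: the article does not say how clips were assigned to folds, so the random shuffle, the fixed seed, and the function name `make_folds` are all assumptions made for illustration.

```python
import random


def make_folds(clip_ids, n_folds=10, seed=0):
    """Yield (train, test) lists of clip ids, one pair per fold.

    Assumption: clips are assigned to folds by a seeded random shuffle.
    Any clips left over when len(clip_ids) is not divisible by n_folds
    are only ever used for training.
    """
    ids = list(clip_ids)
    random.Random(seed).shuffle(ids)
    fold_size = len(ids) // n_folds
    for k in range(n_folds):
        test = ids[k * fold_size:(k + 1) * fold_size]
        held_out = set(test)
        train = [c for c in ids if c not in held_out]
        yield train, test


# 100 clips, numbered 0..99 as hypothetical ids
folds = list(make_folds(range(100)))
for train, test in folds:
    # each round trains on 90 clips and classifies the remaining 10
    assert len(train) == 90 and len(test) == 10
```

Rotating the held-out clips in this way lets every clip serve as test data exactly once, which matters when only 100 recordings are available.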

ICSI's system analyzed the acoustics of the 90 training clips, represented them as i-vectors, and compared these against the 10 test clips, which were also represented as i-vectors. While its accuracy in general was similar to that of the baseline system, its performance classifying outdoor scenes (busy street, open air market, park, and quiet street) was about 50 percent better than the baseline. Also, its variance – the difference between the best and worst performance – was the lowest of any system submitted for the challenge. Benjamin said that one limitation of the i-vector approach in the challenge was that it requires more data – the system normally trains on hundreds of recordings.
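To make the idea of comparing fixed-length clip representations concrete, here is a minimal Python sketch. It is not the team's implementation: the article does not say how the i-vectors were compared, so cosine similarity against a per-scene mean vector is an assumption, and the three-dimensional toy vectors and the names `cosine`, `mean_vector`, and `classify` are hypothetical. Extracting real i-vectors from audio is outside the scope of this sketch.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mean_vector(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def classify(test_vec, train_vecs_by_scene):
    """Return the scene whose mean training vector is closest to test_vec."""
    models = {scene: mean_vector(vecs)
              for scene, vecs in train_vecs_by_scene.items()}
    return max(models, key=lambda scene: cosine(test_vec, models[scene]))


# Toy stand-ins for clip vectors (assumption: real i-vectors are much longer)
train = {
    "park": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "restaurant": [[0.1, 0.9, 0.3], [0.0, 0.8, 0.4]],
}
print(classify([0.7, 0.3, 0.0], train))  # prints "park"
```

With only a handful of training vectors per scene, each mean is a noisy estimate, which is one way to picture why such a representation benefits from more training recordings.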

Benjamin also said that the team is going to explore using other audio features in the system. For now, the team has only been using mel-frequency cepstral coefficients (MFCCs) in its audio analysis; he said other teams had success with different features. The work is part of a broader effort by Audio and Multimedia researchers to use different modalities, such as vision, audio, and text, to analyze multimedia, particularly user-generated content like YouTube videos.

Related Paper: "An I-Vector Based Approach for Audio Scene Detection." B. Elizalde, H. Lei, G. Friedland, and N. Peters. Proceedings of the IEEE AASP Challenge: Detection and Classification of Acoustic Scenes and Events (D-CASE) at the IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA 2013), New Paltz, New York, October 2013. Available online at https://www.icsi.berkeley.edu/icsi/publication_details?n=3600.