Featured Research: Privacy

Gazette, Fall 2014

Credit: Mitch Blunt

In June 2013, the Guardian published its first report based on documents provided by former National Security Agency contractor Edward Snowden. Just over a year later, privacy seems to be on everyone’s mind. In a survey conducted in January by Truste, a data management company that prioritizes consumer safety and privacy, 74 percent of users said they were more worried about online privacy than they had been a year earlier. Pew Research found that half of Americans were worried about their personal information on the Internet in 2013, up from a third in 2009. A quick Google search for “online privacy” calls up articles with ominous headlines such as “Online Privacy is Dead.” But what exactly is online privacy, and why is it important?

“It depends on who you ask and what they know,” says Blanca Gordo, an artificial intelligence researcher. Among other things, she’s been working with the Teaching Privacy team, which has built a web site that explains what happens to personal information when it goes online, along with a set of apps to demonstrate this. The team comprises researchers from three ICSI groups and from UC Berkeley. “This is the kind of work you can’t do alone. It’s complex, but that doesn’t mean it can’t be articulated.”

A Pedagogy of Privacy

Teaching Privacy was first funded through a supplementary grant to the Geo-Tube Project, which is led by Gerald Friedland, director of Audio and Multimedia research, and Robin Sommer of Networking and Security. In this project, researchers are studying the ways in which an attacker can aggregate public and seemingly innocuous information from different media and web sites to harm users. The project seeks to help users, particularly younger ones, understand the risks of sharing information online and the control – or lack thereof – they have over it.

Friedland and Sommer began studying this topic in 2010, when they coined the word “cybercasing” to describe the use of geo-tagged information available online to mount attacks in the real world. In a paper presented at the USENIX Workshop on Hot Topics in Security in August 2010, they showed that it was easy to discover where many photos and videos posted online were taken by extracting metadata with highly precise location information, known as geo-tags, that are embedded by many higher-end digital cameras and smart phones. They then cross-referenced these with other information such as the text accompanying a photo in a Craigslist ad to find users who might be away from the house during the day or who had gone on an extended vacation. In one search of YouTube videos, for example, the researchers were able to find users with homes near downtown Berkeley by searching the embedded geo-location data. They then searched for videos posted by the same users that had been filmed over 1,000 miles away. Within fifteen minutes, the researchers found a resident of Albany, California who was vacationing in the Caribbean, along with a dozen other users who might be vulnerable to burglary.
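
To show how little computation this kind of cross-referencing requires, here is a minimal Python sketch of the distance filter described above. It is not the researchers' code: the record layout, the function names, the 5-mile "near home" radius, and the sample coordinates are all assumptions made for illustration, and the records are invented.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_MILES = 3958.8


def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two (lat, lon) points."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = radians(lat2 - lat1)
    dlam = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * asin(sqrt(a))


def users_far_from_home(posts, home, home_radius=5.0, away_threshold=1000.0):
    """Return users with one post near `home` and another far away from it.

    posts: iterable of (user, lat, lon) tuples (hypothetical schema).
    home_radius and away_threshold are in miles (illustrative defaults).
    """
    near, far = set(), set()
    for user, lat, lon in posts:
        distance = haversine_miles(home[0], home[1], lat, lon)
        if distance <= home_radius:
            near.add(user)
        elif distance >= away_threshold:
            far.add(user)
    return sorted(near & far)


# Invented records: (user, latitude, longitude)
POSTS = [
    ("user_a", 37.8716, -122.2727),  # downtown Berkeley
    ("user_a", 18.2208, -66.5901),   # Puerto Rico, in the Caribbean
    ("user_b", 37.8700, -122.2680),  # Berkeley
    ("user_b", 37.7749, -122.4194),  # San Francisco, too close to count
]
BERKELEY = (37.8716, -122.2727)

flagged = users_far_from_home(POSTS, BERKELEY)
```

With these invented records, only `user_a` has both a post near Berkeley and one more than 1,000 miles away. The point of the sketch is that once geo-tags are public, the filtering itself is trivial.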

Friedland says that the interest sparked by this research motivated him to seek funding for the Geo-Tube project. “In 2010, people weren’t aware that this could be done,” he said. “The issue was to simply raise awareness.”

Much of Audio and Multimedia’s work over the last four years has been to show what can be inferred about users from relatively little information. In ongoing research, for example, they show how it is increasingly possible to estimate where videos were shot even if they are not geo-tagged, by looking at text data such as titles and tags, visual cues such as textures and colors, and sounds such as bird songs and ambulance sirens. In other work, they’ve found that comparing the audio tracks of different videos can show whether they were uploaded by the same person – meaning that online accounts can be linked even if they are created under different names.

But four years and dozens of released NSA documents later, Friedland says, the issue is no longer just informing people that there are privacy risks, but also suggesting mitigations.

“Our expertise is to answer the questions, What can you do technically to protect privacy?” he said. If, for example, you take a photograph of yourself but you don’t want anyone to be able to tell where you are or whom you’re with: “How much do you need to change your face or the background to maintain privacy? You interview Snowden and you blur the background; can you use background noise to identify the city he’s in?”

The Teaching Privacy team works to give students the knowledge and tools to protect their privacy online. The effort, which will continue on as Teaching Resources for Online Privacy Education (TROPE) led by Friedland and Networking and Security researcher Serge Egelman, has developed 10 principles for social media privacy, with technical explanations written for a lay audience and real-life examples. The team has also built several apps that show users exactly what their social media accounts are sharing. The Ready or Not? app, released in August 2013, takes GPS data embedded in Twitter and Instagram posts to create a heat map of where users have been posting from and when.
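
As a rough illustration of what a location heat map involves, the Python sketch below buckets geo-tagged posts by rounded coordinates and hour of day. It is not the Ready or Not? implementation: the input layout, the function name, and the choice of rounding to two decimal places are assumptions, and the sample posts are invented.

```python
from collections import Counter
from datetime import datetime


def heat_map_counts(posts, decimals=2):
    """Count posts per (rounded_lat, rounded_lon, hour_of_day) bucket.

    posts: iterable of (lat, lon, iso_timestamp) tuples (hypothetical schema).
    decimals: rounding precision; two decimal places of latitude is
    roughly one kilometer.
    """
    counts = Counter()
    for lat, lon, timestamp in posts:
        hour = datetime.fromisoformat(timestamp).hour
        counts[(round(lat, decimals), round(lon, decimals), hour)] += 1
    return counts


# Invented posts: (latitude, longitude, ISO 8601 timestamp)
POSTS = [
    (37.8716, -122.2727, "2013-08-01T08:15:00"),
    (37.8719, -122.2731, "2013-08-02T08:40:00"),
    (37.8044, -122.2712, "2013-08-02T19:05:00"),
]

counts = heat_map_counts(POSTS)
hottest_bucket, hottest_count = counts.most_common(1)[0]
```

Here the two morning posts fall into the same bucket, so a viewer can see at a glance where, and at what time of day, the account tends to post.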

The team comprises not only computer scientists, who design the apps and ground the principles in technical research, but also educators and social scientists like Gordo. In separate work, she’s been evaluating the California Connects program, which sought to spread broadband adoption among disconnected populations. The role that she and other artificial intelligence researchers play in Teaching Privacy, she says, is to ground the work in an understanding of the ways people think.

“There is no pedagogy for digital functioning,” she said. “This is why I’m interested in building a language to teach technology.”

One difficulty facing those who want to spread Internet adoption – or, in the case of Teaching Privacy, educate young users about how privacy works – is that few data exist showing how new users understand technology. “We have a responsibility to ground a baseline of understanding by paying attention to what they’re thinking, how they’re processing information,” she said. “We have to understand how they think.”

“A Very Nuanced Issue”

Serge Egelman, who joined Networking and Security in 2013, has also been working on human factors in privacy. In one project, he is investigating how certain individual differences (e.g., personality traits) correlate with privacy preferences and security behaviors; the goal is to build systems that automatically adapt to user preferences. For example, an operating system could analyze behaviors on the computer and on the web, learn a user’s privacy preferences, and then automatically change website privacy settings based on those inferred preferences.

He points to default privacy settings on web sites like Facebook, which are the same for all people who sign up at the same time. “Obviously, everyone doesn’t have the same privacy preferences,” he said. “So, can we look at other behaviors to infer your default preferences?”

Of course, these individualized preferences would require human intervention – you would need to tweak who sees posts you’re tagged in, for example – but they would be a “vast improvement over the default.”

He works with cognitive psychologist Eyal Pe’er of Bar-Ilan University, Israel, to conduct online surveys that measure classic psychometrics, such as extraversion, neuroticism, and risk aversion. They also ask about privacy and security behaviors. In some surveys, participants are asked about increasingly sensitive information, a technique borrowed from behavioral economics. The point at which participants begin refusing to answer gives some insight into how open they are.
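
One simple way to score such a survey is to record the position of the first question a participant declines. The Python sketch below assumes that a refusal is stored as `None` and that questions are ordered from least to most sensitive. Both the encoding and the scoring rule are illustrative assumptions, not the researchers' published method, and the responses are invented.

```python
def first_refusal(answers):
    """Index of the first declined question, or None if all were answered.

    answers: list ordered from least to most sensitive question;
    None marks a question the participant declined (assumed encoding).
    """
    for index, answer in enumerate(answers):
        if answer is None:
            return index
    return None


# Invented responses keyed by participant id
RESPONSES = {
    "p1": ["yes", "no", None, None],
    "p2": ["yes", "yes", "no", "yes"],
    "p3": [None, None, None, None],
}

refusal_points = {pid: first_refusal(a) for pid, a in RESPONSES.items()}
```

A lower index suggests a more guarded participant. Here `p3` declines from the first question, while `p2` answers everything.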

“There’s a spectrum of privacy preferences,” he said. “It’s a very nuanced issue.”

In addition to his position at ICSI, Egelman is a researcher at UC Berkeley’s Electrical Engineering and Computer Sciences Department. There, he is working on a project that investigates how third-party mobile apps use data, with the ultimate goal of giving users the ability to make more fine-grained decisions. He and his colleagues are investigating privacy alerts for apps that chart a middle course between two prevailing practices, one that provides too much information and one that provides too little. On Android phones, for example, every time users download an app, the phone provides a list of the personal data that the app may collect, along with the choice of discontinuing the installation if they do not agree to grant the application access. This list is given without context, however. Take GPS, for example. How does an app use the phone’s GPS data? Is it for a legitimate and helpful location-based service, or is it simply to monetize the data? With this approach, the user becomes habituated to accepting the warnings; users click through the list automatically. “It’s the boy-who-cried-wolf syndrome,” he said.

On the other hand, apps for the Apple iOS platform provide very little information, and because the approval process for iOS apps is so opaque, it’s difficult to understand how collected data are used at all.

He and his colleagues are investigating what aspects of privacy users actually care about and comparing that with situations where those aspects are implicated. The goal is to infer when data use will concern users and then only show privacy warnings during those situations. “We’ve done experiments, and we’ve found that given a practice, some people view this as an encroachment on privacy, while others view it as a desirable feature of an app.”

The Paradox of the Internet

Istemi Ekin Akkus, a fourth-year PhD student at the Max Planck Institute for Software Systems in Kaiserslautern, Germany, who visited Networking and Security this spring and summer, points out that there is a paradox at the heart of the web: the Internet economy relies upon the trust of customers and also upon data about them that they may not want revealed. Analytics, or information about how users browse the web and use mobile apps, provides companies with important customer data that enable ads targeted at customer interests. The advertising industry says these ads are much more effective than traditional advertising, and Akkus says their high revenue allows web sites that display them to provide content for free.

Akkus is interested in developing technologies that both prevent the tracking of users and sustain the online economy. At ICSI, Akkus worked on the Priv3 Firefox extension, which was initially released in 2011. The extension stops certain social media sites from knowing when you’ve visited a page. You’ve probably noticed, while browsing the web, the social widgets that let you post an article to Facebook or Twitter – and perhaps failed to notice, since it’s done invisibly, that those sites are tracking your activity if you’re logged in. This is done through cookies, small text files that sites store on your web browser. Say you log into Twitter and then go to a news site that allows you to tweet the article directly from the page. Your browser downloads the social widget from Twitter’s web site. While doing so, it also sends the cookie Twitter has stored on your computer when you logged in to Twitter. Upon receiving this cookie, Twitter knows you’ve visited the page even if you choose not to tweet the article. Priv3 stops Twitter, along with Facebook, LinkedIn, and Google+, from receiving such cookies right away: it waits until you interact with the widget (by liking the article on Facebook, for example, or sharing it on your LinkedIn profile). In this way, the user gets to enjoy the benefits of social widgets as well as protection from third parties silently tracking browsing.
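
The decision described above, withholding a listed site's cookies until the user interacts with its widget, can be sketched in a few lines of Python. This is an illustration of the idea only, not Priv3's source code: the class name, method names, and the exact domain list are hypothetical, and a real browser extension would hook into the browser's request pipeline rather than being called directly.

```python
from urllib.parse import urlsplit

# Hypothetical blacklist of social widget domains (illustrative only)
WIDGET_DOMAINS = {"twitter.com", "facebook.com", "linkedin.com", "plus.google.com"}


def host_of(url):
    """Lowercased hostname of a URL, or an empty string if it has none."""
    return (urlsplit(url).hostname or "").lower()


def matches(host, domain):
    """True if host is the domain itself or one of its subdomains."""
    return host == domain or host.endswith("." + domain)


class WidgetCookieGate:
    """Withhold cookies from listed widget domains until the user interacts."""

    def __init__(self, blacklist=WIDGET_DOMAINS):
        self.blacklist = set(blacklist)
        self.unlocked = set()  # (page_host, widget_domain) pairs

    def _listed_domain(self, host):
        for domain in self.blacklist:
            if matches(host, domain):
                return domain
        return None

    def user_interacted(self, page_url, widget_url):
        """Record that the user clicked a widget from widget_url on this page."""
        domain = self._listed_domain(host_of(widget_url))
        if domain is not None:
            self.unlocked.add((host_of(page_url), domain))

    def should_send_cookies(self, page_url, request_url):
        domain = self._listed_domain(host_of(request_url))
        if domain is None:
            return True  # not a listed site: behavior is unchanged
        page_host = host_of(page_url)
        if matches(page_host, domain):
            return True  # the user is visiting the social site itself
        return (page_host, domain) in self.unlocked
```

In this sketch, a request from a news page to a Twitter-hosted widget script is sent without cookies until `user_interacted` is called for that page, after which cookies flow normally.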

While useful, Priv3 depends on a blacklist of specific social media sites, which must be maintained as new social media sites gain popularity. In addition, cookies are used by more than just social media sites. In 2012, the Berkeley Center for Law and Technology found that all of the most popular 100 web sites used cookies, more than two-thirds of which came from third parties. These cookies follow users from site to site, allowing companies to keep track of their interests (at least, as inferred from their web browsing history) and place ads that are most relevant to potential customers. This may end up revealing more than users want to share. Akkus, with other members of the group, is working to extend Priv3 to all third-party tracking without the need for a blacklist. With this generalization, the tracking by these third parties using cookies will be mostly ineffective: although they will be able to set new cookie values, they will not be able to receive them unless the user wants them to.
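
A blacklist-free rule of this kind might look like the Python sketch below: any request to a site other than the one in the address bar goes out without cookies unless the user has allowed that site. The function names and the cookie-jar layout are hypothetical, and the "last two labels" test for what counts as the same site is a deliberate simplification; a real implementation would consult the Public Suffix List.

```python
from urllib.parse import urlsplit


def site_of(url):
    """Naive 'site' of a URL: the last two labels of its hostname.

    Assumption for illustration only; domains such as example.co.uk
    need the Public Suffix List to be handled correctly.
    """
    host = (urlsplit(url).hostname or "").lower()
    return ".".join(host.split(".")[-2:])


def is_third_party(page_url, request_url):
    """True if the request goes to a different site than the page."""
    return site_of(page_url) != site_of(request_url)


def cookies_to_send(page_url, request_url, cookie_jar, allowed_sites):
    """Cookies to attach to a request.

    cookie_jar: dict mapping site -> dict of cookie name/value pairs.
    allowed_sites: sites the user has chosen to share cookies with.
    Third parties may still set cookies; they simply do not get them back.
    """
    site = site_of(request_url)
    if is_third_party(page_url, request_url) and site not in allowed_sites:
        return {}
    return dict(cookie_jar.get(site, {}))


# Invented cookie jar
JAR = {"tracker.net": {"id": "abc"}, "example.com": {"session": "xyz"}}
PAGE = "https://news.example.com/article"

blocked = cookies_to_send(PAGE, "https://ads.tracker.net/pixel.gif", JAR, set())
first_party = cookies_to_send(PAGE, "https://static.example.com/site.css", JAR, set())
allowed = cookies_to_send(PAGE, "https://ads.tracker.net/pixel.gif", JAR, {"tracker.net"})
```

In this sketch the tracker's cookie stays in the jar but is not sent back until the user allows that site, while first-party requests are unaffected.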

Nicholas Weaver, a senior researcher in Networking and Security and a self-described paranoid, disputes that the Internet economy necessarily depends on the collection of browsing data. “There’s tons of proof that you can do advertising without using tracking and still make money at it,” he said, pointing to television and print ads as examples. “They don’t actually need to know that I visited site A when I visit site B. All they need to know is that I’m the kind of person who would visit site B.”

“In some ways, it’s a failure of the Internet ecosystem that it relies on such aggressive tracking because of the pushback,” he said. For example, many people use ad-blocking technologies, like AdBlock, not because they dislike the ads but because they are worried about tracking.

Government Surveillance

Weaver says ads, particularly ad banks used by smartphone apps, present another concern: according to documents leaked by Snowden, the U.S. government “piggybacks” on the tracking information sent by ads, such as the phone’s location. This information can be correlated with, say, an Instagram account that includes the user’s name.

Weaver has been focusing some of his paranoia on the Snowden revelations. He sees three major concerns with the scope and depth of NSA surveillance. First, it carries the potential for abuse. “Look at what J. Edgar Hoover did. What would Hoover have been able to accomplish with the NSA’s level of surveillance?” he said.

The first Snowden disclosure, on June 5, 2013, showed that the NSA had been collecting the phone records of Verizon customers, regardless of whether they were suspected of wrongdoing. “It is vastly dangerous to have that data in raw form,” said Weaver. “Give me that data and I will have a blackmail target in every senate office.”

Fortunately, he says, the NSA has used this data “remarkably” responsibly. But the revelations raise a second concern: many techniques used by the NSA can be done with small, off-the-shelf hardware.

“This is what really worries me, that the replicability is so easy that you can do it almost anywhere,” he said. “The only limitation on adversaries is vantage point.”

Russia already uses a system similar to one NSA program revealed by Snowden; in 2013, the U.S. State Department warned travelers to the Winter Olympics in Sochi about surveillance, recommending that “essential devices should have all personal identifying information and sensitive files removed or ‘sanitized.’” Weaver also said the Great Firewall of China, which is now used to censor the country’s Internet access, could easily be used for surveillance.

Not only is this level of surveillance easy, but it now carries the imprimatur of the U.S. Weaver pointed to reports last year that the NSA had spied on German Chancellor Angela Merkel’s cell phone, as well as on other members of the European Union. “The NSA has given the permission to do this,” he said. “This acts as an open invitation.”

But even if the technology is not abused either by the U.S. or by foreign adversaries, Weaver says, the knowledge that this information is being collected has a chilling effect on Internet use. “It affects us through self-censorship,” he said. “If there is a possibility, however remote, that a disclosure may cause you damage, and there’s a worry about that, it changes your behavior, often to your detriment.”

Friedland, the Audio and Multimedia director, says the chilling effect, and the potential for abuse, are particularly problematic in a free country. “That’s why secrets are a fundamental part of our democracy,” he said. “There needs to be a point where you can switch off the record.”

Technology to Repress

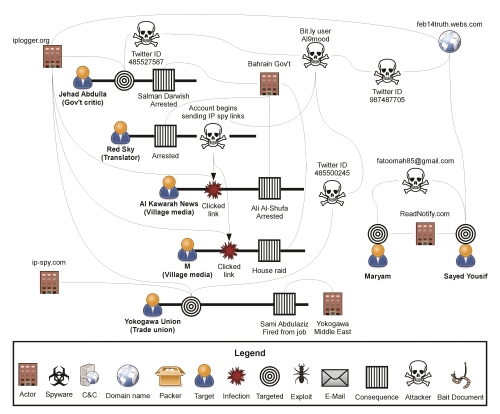

Bill Marczak, a PhD student at UC Berkeley and a researcher in Networking and Security, has been studying how governments in what are not considered free countries use technology to repress activists, journalists and other critics of their governments. Marczak, Networking and Security Director Vern Paxson, and members of the Citizen Lab at the University of Toronto’s Munk School of Global Affairs have found that the governments in Bahrain, Syria, and the United Arab Emirates use malware to identify and attack activists. A paper describing the research was presented at the USENIX Security Symposium in August.

Marczak wants to bring international media attention to this practice and develop technical solutions and training to give activists the ability to protect themselves against cyber-attacks from regimes.

“I bring a certain type of research which is so often missing from activism,” he said. He noted that activist research often lacks rigorous evidentiary standards.

Marczak’s interest in the Middle East dates back to the three and a half years he spent in Bahrain as a high school student. “When you go to high school in a certain place, you get to know people there,” he said. “It really becomes part of who you are.”

During the Arab Spring uprisings, which came to a head in Bahrain on February 14, 2011, the country’s government began increasing its use of physical weapons to fight protesters and turned to Western PR firms to burnish its reputation abroad. At the time, Marczak was interested in cloud computing and programming languages; he decided to switch his focus to political surveillance and electronic attacks.

Through Twitter, he got to know several activists working in the Middle East; in February 2012, they founded Bahrain Watch. The organization, which is independent and works on research and advocacy, operates “without any sort of structure, as an Internet collective.” Marczak says this helps them defend against attacks from repressive regimes: “Their minds are calibrated to look for activists as people who are organizing.”

Marczak also works with several human rights groups, including Privacy International, a charity based in London, and the Citizen Lab.

In April and May 2012, several activists interested in Bahrain were sent suspicious attachments, which, when Marczak analyzed them, turned out to contain spyware developed by Gamma International, a company based in Germany. The spyware, FinFisher, can record passwords, log keystrokes, and take screenshots of the infected computer. Marczak and his colleagues traced the spyware’s command and control server to an address in Bahrain.

In order to identify other activity, they sent probes to servers and watched their behavior. They then scanned the Internet, searching for similar behavior. In addition to the server in Bahrain, they’ve found similar spyware associated with servers in six other countries considered to be ruled by oppressive regimes.

Marczak says the Citizen Lab in Toronto has been a particularly strong partner in identifying spyware. The lab, which primarily focuses on government surveillance and censorship systems, has a global network with connections to people in countries around the world.

At the USENIX Security Symposium in August, Marczak, Paxson, and Marczak’s colleagues at Citizen Lab and UC Los Angeles presented a paper about their research into FinFisher, as well as the use of Hacking Team’s Remote Control System in the United Arab Emirates. Like Gamma, Hacking Team markets its software exclusively to governments. In Syria, they investigated the use of off-the-shelf remote access trojans. They found that the attacks were probably a factor in the year-long imprisonment of one activist and the publication of embarrassing videos of another, who was subsequently discredited.

The paper describes the “careful social engineering” used by attackers – in Bahrain, for example, several activists received email messages with attachments that were claimed to be reports of torture or pictures of jailed citizens. The paper also notes that this is the first step in a broader, rigorous study of attacks targeted at individuals by governments.

Marczak said, “It’s difficult because researchers have little visibility into what activists are doing. I suspect more’s going on than we can see. Part of the goal is to gain better visibility and engagement with activists.”

What’s to be Done

Even without the risk of abuse on the level seen by Marczak and his colleagues, online privacy remains an important issue for most users of the Internet. Gordo says, “In different fields and different discourses, you’re finding this question: What is privacy?”

Gordo says Europeans and Americans differ in their approach to privacy. In Europe, privacy is a human right; “in the United States, it’s a contract. But if it’s a contract, people need to know what they’re signing on to.”

Egelman points out that Europeans and Americans also differ in how they view the government: Europeans believe that the government serves to protect them from corporations; Americans tend to worry more about the intrusion of government into the private sector.

He says, “It ultimately comes down to exerting control over your information: preventing its dissemination, controlling its dissemination, or simply being aware of how your information is disseminated.” For his work, in which he tries to make security warnings and privacy defaults responsive to individual users, “there is no universal truth. It’s about informed consent.”

Gordo says, “For me, it’s urgent because policy is being formulated, and the end user isn’t being taken into account.”

And after informing users and policy-makers, researchers will have a role in developing mitigations. Egelman is interested in the future of wearable computing – think Google Glass. “The approach we should be taking is, what are the issues we can imagine when these devices are pervasive, and what are the things we can do to mitigate those issues,” he said. “We should be thinking about these issues now. We can prevent issues down the road when the devices are pervasive.”

Friedland said, “Independent institutions like ICSI will play a role” in the work to secure privacy. “They will go in and say, ‘Look, this is what we can do.’”

Teaching Privacy’s 10 Principles and its apps, Egelman’s work on inferred privacy preferences, Akkus’s work on Priv3, and several other projects are all part of that work. And you can always just heed Weaver’s simple advice:

“Encrypt everything.”