Audio and Multimedia Researchers Win ACM Grand Challenge

November 20, 2015

Using an application developed by the Audio and Multimedia Group and its collaborators, you can search a corpus of nearly 100 million photos and videos for media that relates to particular events, such as Occupy Wall Street protests or the eruption of the Icelandic volcano Eyjafjallajökull. The application analyzes metadata – that is, tags, time stamps, and location information – that accompanies the media, as well as its visual and audio attributes. It then shows the frequency of photos and videos in a timeline and produces a heat map of where the events took place.

A search on Evento for the Eyjafjallajökull volcano eruption.

The application was built by Jaeyoung Choi, a researcher in the group, and Gerald Friedland, its director, along with collaborators at Oracle America Inc. and Delft University of Technology. For this work, they won the Grand Challenge at the ACM Multimedia Conference, held October 26 – 30 in Brisbane, Australia.

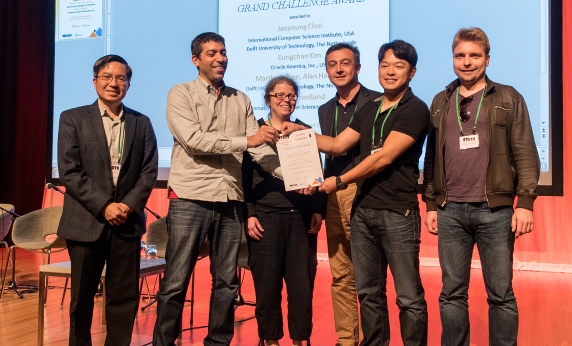

Jaeyoung Choi of the Audio and Multimedia Group accepts the ACM Multimedia Grand Challenge Award.

The work is part of a broader effort, the Multimedia Commons Project, to develop and share sets of computed features and ground-truth annotations for the Yahoo Flickr Creative Commons 100 Million dataset (YFCC100M). That dataset contains around 99 million images and 800,000 videos released under Creative Commons licenses.

The first ACM Multimedia Grand Challenge, in 2009, was won by ICSI researchers Gerald Friedland, Adam Janin, and Luke Gottlieb. In 2013, Friedland founded the Audio and Multimedia Group to continue work on automatic analysis of media posted online.

The YFCC100M was released in 2014, and since then, members of the group have released several sets of features, or statistical representations of audio and visual characteristics, that they have automatically extracted from the dataset. These feature sets are released publicly through the Multimedia Commons Project.